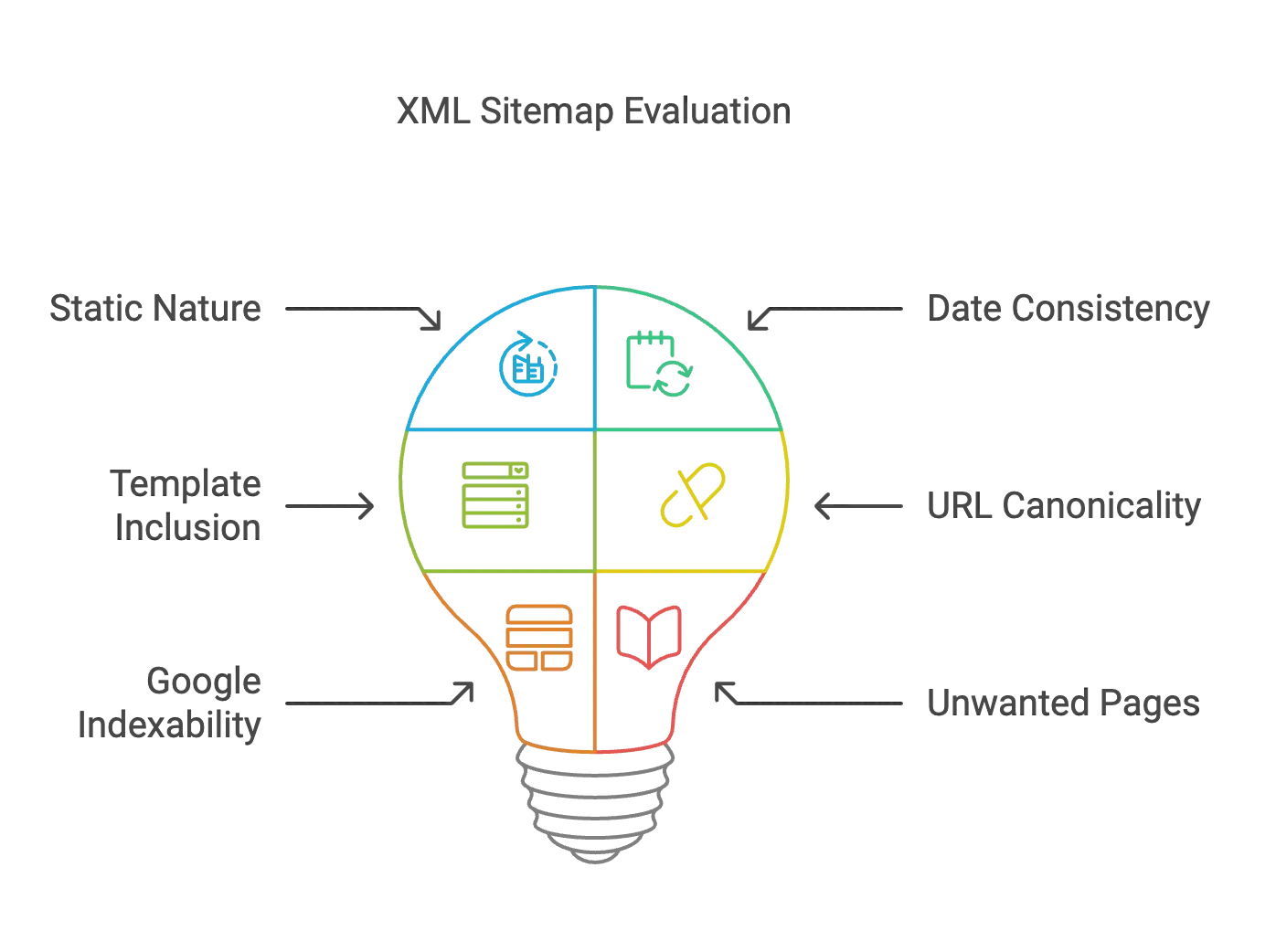

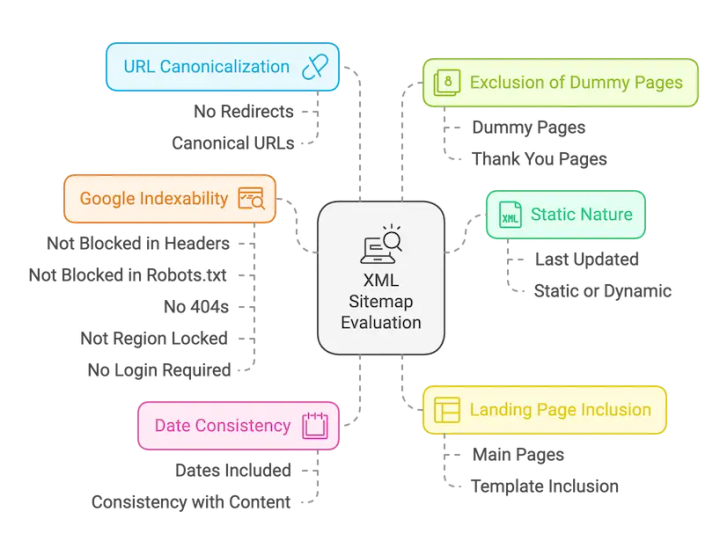

After auditing more websites than I can count, one of the first places I always check is the XML sitemap. It gives me a quick snapshot of the website’s structure and often serves as a reflection of its overall optimization state. I’m frequently surprised by how many crucial elements are mishandled or overlooked, especially by some CMS and e-commerce platforms. I’m not looking for perfection, just "good enough", but one day I’d love to have full control over how a brand nails this foundational piece.

Think of an XML sitemap as a prioritized list of URLs you want Google to crawl. It tells search engines where to start, what to focus on, and what to skip. You can even use it to include extra information, like images, hreflang, and video metadata.
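To make this concrete, here is a minimal sketch of a sitemap with one URL carrying hreflang alternates and an image, built with Python's standard library. The namespaces are the standard sitemaps.org, Google image-extension and XHTML ones; the example.com URLs and the shape of the `pages` input are hypothetical.

```python
import xml.etree.ElementTree as ET

# Standard namespaces: sitemaps.org protocol, Google's image extension, XHTML (for hreflang links).
SM_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
XHTML_NS = "http://www.w3.org/1999/xhtml"

ET.register_namespace("", SM_NS)
ET.register_namespace("image", IMAGE_NS)
ET.register_namespace("xhtml", XHTML_NS)


def build_sitemap(pages):
    """Build a <urlset> from hypothetical page dicts.

    Each dict has 'loc', plus optional 'alternates' ({hreflang: URL}) and 'images' ([URL]).
    """
    urlset = ET.Element(f"{{{SM_NS}}}urlset")
    for page in pages:
        url = ET.SubElement(urlset, f"{{{SM_NS}}}url")
        ET.SubElement(url, f"{{{SM_NS}}}loc").text = page["loc"]
        # One xhtml:link per language version (including the page itself).
        for lang, href in page.get("alternates", {}).items():
            ET.SubElement(url, f"{{{XHTML_NS}}}link",
                          rel="alternate", hreflang=lang, href=href)
        # One image:image entry per image on the page.
        for image_url in page.get("images", []):
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_url
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap([{
    "loc": "https://example.com/",
    "alternates": {"en-gb": "https://example.com/", "fr-fr": "https://example.com/fr/"},
    "images": ["https://example.com/hero.jpg"],
}]))
```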

An XML sitemap doesn’t need to include every single URL on your site, but if you want something crawled quickly, it absolutely belongs there. To find all of a website's XML sitemaps, you’ll need to check with its developers or in Google Search Console. Sitemaps don’t have to sit at the default location (such as /sitemap.xml) or even be listed in the robots.txt file, although both are good places to check. In fact, many companies deliberately leave them out of robots.txt to reduce the risk of competitors scraping them.
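If you have a site's robots.txt content to hand, Python's standard library can pull out any declared `Sitemap:` lines. A minimal sketch, assuming Python 3.9+ (`site_maps()` itself arrived in 3.8); an empty result doesn't mean the site has no sitemaps, only that none are declared there.

```python
from urllib.robotparser import RobotFileParser


def sitemaps_from_robots(robots_txt: str) -> list[str]:
    """Return the Sitemap: URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None (not an empty list) when nothing is declared.
    return parser.site_maps() or []


robots_txt = """User-agent: *
Disallow: /checkout/
Sitemap: https://example.com/sitemap_index.xml
"""
print(sitemaps_from_robots(robots_txt))  # ['https://example.com/sitemap_index.xml']
```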

Smaller websites may not need an XML sitemap, but for most large websites it is strongly recommended to have well-maintained XML sitemaps submitted to Google.

Only canonical URLs

The URLs in your XML sitemap should align with your canonical tags and, ideally, your internal linking structure. This ensures that Google only needs to crawl one version of each page to access your content, rather than wasting resources on multiple minor variants. The result? Better rankings, faster.

If the URL in your sitemap doesn’t match the canonical tag, that page is unlikely to be indexed. This mismatch is often caused by issues like:

- Paginated pages

- Trailing slashes not matching (e.g., /product1 vs. /product1/)

- Dashes not matching, such as using an em dash instead of a hyphen

- Underscores instead of dashes

- Case discrepancies (e.g., /Product1/ vs. /product1/)

- Protocol or subdomain mismatches

A common oversight I’ve come across is sitemaps listing URLs with http instead of https. Thankfully, tools like Screaming Frog or Sitebulb make it easy to audit your XML sitemap and catch these issues before they cause problems.
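A quick way to triage mismatches from a crawl export is to compare each sitemap URL with the canonical URL found on the page and name the difference. This is a hypothetical helper, not a feature of Screaming Frog or Sitebulb; the categories mirror the list above and assume you already have (sitemap URL, canonical URL) pairs.

```python
from urllib.parse import urlsplit

# Path differences from the list above; each normaliser removes exactly one kind of difference.
NORMALISERS = {
    "trailing slash": lambda p: p.rstrip("/") or "/",
    "case": str.lower,
    "dash character": lambda p: p.replace("\u2013", "-").replace("\u2014", "-"),
    "underscore vs hyphen": lambda p: p.replace("_", "-"),
}


def _normalise(path, names):
    for name in names:
        path = NORMALISERS[name](path)
    return path


def mismatch_reasons(sitemap_url, canonical_url):
    """Hypothetical helper: list why a sitemap URL differs from its canonical URL."""
    a, b = urlsplit(sitemap_url), urlsplit(canonical_url)
    reasons = []
    if a.scheme.lower() != b.scheme.lower():
        reasons.append("protocol")
    if a.netloc.lower() != b.netloc.lower():
        reasons.append("host/subdomain")
    if a.query != b.query:
        reasons.append("query string (e.g. pagination)")
    everything = list(NORMALISERS)
    if _normalise(a.path, everything) != _normalise(b.path, everything):
        reasons.append("different path")
        return reasons
    # A difference counts if normalising everything except it still leaves the paths unequal.
    for name in everything:
        others = [n for n in everything if n != name]
        if _normalise(a.path, others) != _normalise(b.path, others):
            reasons.append(name)
    return reasons


print(mismatch_reasons("http://www.example.com/Product1", "https://www.example.com/product1/"))
# ['protocol', 'trailing slash', 'case']
```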

Only indexable URLs

Every URL in your XML sitemap should return a 200 status code directly (not via a redirect) the first time it is requested, so it’s accessible to Google (or your target search engine). Including non-indexable URLs, broken links, or URLs blocked by robots.txt is not only pointless but also wastes valuable crawl budget.

That said, there are exceptions to this rule. While your sitemap should generally represent URLs you want indexed, there are times you might include redirects or URLs returning a 404 or 410 status code, so that search engines recrawl them and pick up the change sooner. These cases are usually temporary, creating what I like to call a "dirty" XML sitemap to manage transitions or cleanup effectively.
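If you have status codes from a crawl, you can sketch this check in a few lines. The function and its inputs (`statuses`, `noindexed`) are hypothetical. robots.txt rules are evaluated with Python's `urllib.robotparser`, which does simple prefix matching and may not match Google's handling of wildcards or overlapping Allow/Disallow rules. Redirects and 404/410s are flagged as transitional rather than as errors, in line with the "dirty" sitemap exception.

```python
from urllib.robotparser import RobotFileParser

# Acceptable only while a temporary "dirty" sitemap is doing its job.
TRANSITIONAL = {301, 302, 307, 308, 404, 410}


def audit_indexability(urls, statuses, robots_txt, noindexed=frozenset(), user_agent="Googlebot"):
    """Classify sitemap URLs using hypothetical crawl data.

    statuses: {url: HTTP status of the first request, redirects not followed}
    noindexed: URLs whose page carries a noindex directive
    """
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    report = {}
    for url in urls:
        status = statuses.get(url)
        if not robots.can_fetch(user_agent, url):
            report[url] = "blocked by robots.txt"
        elif status == 200:
            report[url] = "noindex" if url in noindexed else "ok"
        elif status in TRANSITIONAL:
            report[url] = "transitional (remove once recrawled)"
        else:
            report[url] = f"problem (status {status})"
    return report
```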

Created, not updated (and never removed)

Whenever I see an XML sitemap labeled "created by Screaming Frog," it raises a red flag. Don’t get me wrong—Screaming Frog is a fantastic tool—but only if it’s used properly. Static XML sitemaps require regular updates as your site evolves. If they aren’t updated, they quickly become obsolete.

All too often, I come across sitemaps that were generated once, submitted to Google, and then left to rot. A quick search can reveal outdated examples, like:

- Leeds Building Society’s savings sitemap: Created with Screaming Frog v3! All pages are gone, though they return 302s. https://www.leedsbuildingsociety.co.uk/sitemaps/savings-sitemap.xml

- Virgin Media’s cable sitemap: Similarly outdated. https://www.virginmedia.com/cable.sitemap.xml

- Clarks Shoes has a comparable issue, though most of their links are still valid.

If you’re auditing XML sitemaps, here’s a tip: check the "last modified" dates, if available. A date older than a few months suggests it hasn’t been updated in a while. Also, if the sitemap mentions Screaming Frog, check the version number—it’s a clear giveaway of how long it’s been sitting untouched. As of now, the latest version is 21, so anything older is suspect.
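Both checks are easy to script. A minimal sketch, assuming `<lastmod>` values with at least day precision and a generator comment containing "Screaming Frog" followed by a version number (the exact comment wording is an assumption, so adjust the regex to what you actually see). The 90-day threshold stands in for "a few months", and the default latest version of 21 will date quickly.

```python
import re
import xml.etree.ElementTree as ET
from datetime import date

SM_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
# Assumption: the generator comment contains "Screaming Frog" followed by a version number.
SF_VERSION = re.compile(r"Screaming Frog[^\d<]*(\d+)")


def staleness_report(xml_text, today, max_age_days=90, latest_sf_version=21):
    """Flag a sitemap whose newest <lastmod> is old, or that was built by an old Screaming Frog."""
    root = ET.fromstring(xml_text)
    # Keep the YYYY-MM-DD part of each W3C datetime (assumes at least day precision).
    days = [date.fromisoformat(el.text.strip()[:10])
            for el in root.iter(f"{SM_NS}lastmod") if el.text]
    newest = max(days) if days else None
    match = SF_VERSION.search(xml_text)
    version = int(match.group(1)) if match else None
    return {
        "newest_lastmod": newest,
        "stale": newest is not None and (today - newest).days > max_age_days,
        "screaming_frog_version": version,
        "old_generator": version is not None and version < latest_sf_version,
    }
```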

Parameters not representative - Date & Update frequency

An XML sitemap can include three optional tags for each URL, changefreq (update frequency), priority, and lastmod (last modified), but only the URL itself (the loc tag) is required. As Mark Williams-Cook noted, Google either trusts the data you provide or it doesn’t, making these parameters potentially useful, but only if implemented correctly.

In theory, these parameters should communicate:

- How often the content is updated.

- When it was last modified.

Yet in practice, this is often poorly executed. For example, blog posts might be marked as updated daily (highly unlikely), and the "last modified" date might be the same for every page on the site—even static ones like terms and conditions or privacy policies. This lack of accuracy can skew how Google prioritizes its crawling, which is critical for large e-commerce websites. You don’t want Google wasting resources re-crawling unchanged URLs daily instead of focusing on genuinely new or updated content.

If your sitemap claims that all 1,256 blog posts were updated in a single day, it looks suspicious and undermines trust in the data. Google will still re-crawl old URLs as needed, and forcing a recrawl won’t improve results.
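One rough way to spot this pattern is to measure what share of URLs have the single most common `<lastmod>` day. The 50% threshold below is an arbitrary heuristic, not a Google rule; treat this as an auditing sketch, not a definitive test.

```python
from collections import Counter


def lastmod_clustering(lastmods, threshold=0.5):
    """Return (most common day, share of URLs on that day, suspicious?) for lastmod strings."""
    days = [value[:10] for value in lastmods if value]  # keep the YYYY-MM-DD part
    if not days:
        return None, 0.0, False
    day, count = Counter(days).most_common(1)[0]
    share = count / len(days)
    return day, share, share >= threshold
```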

Google has explicitly stated that it now ignores change frequency and priority but will respect the last modified date if it appears accurate. For this, you will probably need to generate your XML sitemap dynamically as an output from your CMS to ensure the data reflects reality.
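A minimal sketch of what that CMS output might look like, assuming a hypothetical `Page` record that stores when its main content last changed. It emits only `<loc>` and `<lastmod>` (as a W3C datetime in UTC) and leaves out `<changefreq>` and `<priority>`, since Google ignores them.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from xml.sax.saxutils import escape


@dataclass
class Page:  # hypothetical CMS record
    url: str
    content_updated_at: datetime  # timezone-aware time the main content last changed
    indexable: bool = True


def render_sitemap(pages):
    """Render indexable pages with a lastmod taken from real content changes."""
    lines = ['<?xml version="1.0" encoding="UTF-8"?>',
             '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">']
    for page in pages:
        if not page.indexable:
            continue
        lastmod = page.content_updated_at.astimezone(timezone.utc).isoformat(timespec="seconds")
        # escape() turns & < > into entities so the XML stays well-formed.
        lines.append(f"  <url><loc>{escape(page.url)}</loc><lastmod>{lastmod}</lastmod></url>")
    lines.append("</urlset>")
    return "\n".join(lines)
```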

Thank You, In Development, & Error Page Templates

It might seem obvious, but pages that aren’t intended as landing pages—like form submission "thank you" pages or iframes—shouldn’t be included in your XML sitemap. A quick scan for URLs containing "thankyou" is a good way to spot these.

The same logic applies to in-development or test pages. If you don’t want random internet users or search engines stumbling across them, don’t put them in the sitemap! Simple as that.
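You can extend that quick scan into a simple pattern filter. The patterns below are hypothetical examples for thank-you, test/dev/staging, iframe and error URLs; tune them to your own URL conventions and review matches by hand, since a pattern can catch a legitimate page.

```python
import re

# Hypothetical patterns: thank-you pages, test/dev/staging paths and hosts, iframes, error templates.
EXCLUDE_RE = re.compile(
    r"thank-?you|/(?:dev|test|staging)/|//(?:dev|test|staging)\.|iframe|/404\b|/error\b",
    re.IGNORECASE,
)


def flag_non_landing_urls(urls):
    """Return sitemap URLs that look like they shouldn't be landing pages."""
    return [url for url in urls if EXCLUDE_RE.search(url)]
```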

Linking to the sitemap from the footer

If you want search engines to find your XML sitemap, the best practice is to submit it through webmaster tools—Google Search Console for Google or Bing Webmaster Tools for Bing. There’s no need to link to it from your site’s footer, as sitemaps are intended for search engines, not users. Keeping them out of your footer also helps maintain a cleaner and more user-focused navigation.

Not submitting to all relevant Search Engines

At a minimum, you should submit your XML sitemap to both Google and Bing. It’s quick and easy, especially since Bing allows you to verify your site using your existing Google Search Console account. This integration streamlines the process and ensures your sitemap is visible to two of the biggest search engines.

For Bing setup details, check out their guide:

Beyond Google and Bing, consider submitting your sitemap to other search engines based on your target audience’s usage. For example, Yandex and Baidu are strong candidates for markets where they dominate.

All your sitemap URLs in one unsorted sitemap

While it’s not technically wrong to include all your URLs in a single XML sitemap, it’s far more effective to segment them by page type or template. For example, if you’re running an e-commerce site, consider splitting your sitemaps into separate files for products, categories, and blog posts. This segmentation allows you to monitor coverage more effectively in tools like Google Search Console and Bing Webmaster Tools. By analyzing coverage by template, you can identify issues more easily and improve your technical SEO reporting.

In an ideal scenario, you could even segment further—for example, breaking categories into individual sitemaps. While this level of detail can enhance visibility, it’s often a technical challenge to implement.

Sitemap Best Practices:

- Segment sitemaps by page type (template).

- Limit each sitemap to 50,000 URLs.

- Keep each sitemap under 50MB uncompressed.

You can use a sitemap index file to manage multiple sitemaps; it acts as a directory for search engines. However, be aware that once an XML sitemap has been submitted to Google, Search Console will keep reporting on it even if you later remove or restructure it, until you also remove it from the Sitemaps report. Plan your structure carefully to avoid confusion down the line.
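Here's a minimal sketch of segmenting URLs by template, splitting each segment to stay within the 50,000-URL and 50MB limits, and tying the files together with a sitemap index. The file naming scheme and the `urls_by_template` input are hypothetical.

```python
from xml.sax.saxutils import escape

MAX_URLS = 50_000
MAX_BYTES = 50 * 1024 * 1024  # 50MB uncompressed

SM_HEAD = ('<?xml version="1.0" encoding="UTF-8"?>\n'
           '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n')
SM_TAIL = "</urlset>\n"


def chunk_entries(entries):
    """Split <url> entries into chunks that respect both the URL-count and byte limits."""
    chunks, current, size = [], [], len(SM_HEAD) + len(SM_TAIL)
    for entry in entries:
        entry_size = len(entry.encode("utf-8"))
        if current and (len(current) >= MAX_URLS or size + entry_size > MAX_BYTES):
            chunks.append(current)
            current, size = [], len(SM_HEAD) + len(SM_TAIL)
        current.append(entry)
        size += entry_size
    if current:
        chunks.append(current)
    return chunks


def build_segmented_sitemaps(urls_by_template, base_url):
    """urls_by_template: {'products': [...], 'categories': [...]} -> (files, index_xml)."""
    files = {}
    for template, urls in urls_by_template.items():
        entries = [f"  <url><loc>{escape(u)}</loc></url>\n" for u in urls]
        for n, chunk in enumerate(chunk_entries(entries), start=1):
            files[f"sitemap-{template}-{n}.xml"] = SM_HEAD + "".join(chunk) + SM_TAIL
    # The index lists every segment file so search engines can find them all.
    index = ['<?xml version="1.0" encoding="UTF-8"?>',
             '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">']
    for name in files:
        index.append(f"  <sitemap><loc>{escape(base_url + name)}</loc></sitemap>")
    index.append("</sitemapindex>")
    return files, "\n".join(index)
```

Because each template gets its own files, coverage reports in Search Console and Bing Webmaster Tools can then be read per template.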

All crawlable pages

It’s not exactly a mistake, but I’ve often seen audits where there’s an overzealous attempt to include every crawlable page, all paginated pages, and sometimes even all the faceted navigation. While this approach might seem sound, the return on investment (ROI) is often lower than expected.

Once Google crawls a page, it will automatically discover and crawl related pages through internal links. So, rather than focusing on crawling every page in a category, for example, it makes more sense to prioritize the first pages of category listings over page 12, especially if all the products or linked pages are already in the XML sitemap. This approach helps direct Google’s attention to the most important content first, improving crawl efficiency and reducing unnecessary complexity in your sitemap.
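If your paginated URLs use a query parameter, trimming deep pages from the sitemap can be as simple as the sketch below. It assumes a hypothetical `?page=N` convention and that the products on deeper pages are already listed in their own sitemap.

```python
from urllib.parse import parse_qs, urlsplit


def trim_deep_pagination(urls, max_page=1, page_param="page"):
    """Keep only URLs whose (hypothetical) ?page=N value is at most max_page."""
    kept = []
    for url in urls:
        values = parse_qs(urlsplit(url).query).get(page_param)
        # URLs without a numeric page parameter are treated as page 1.
        page = int(values[0]) if values and values[0].isdigit() else 1
        if page <= max_page:
            kept.append(url)
    return kept
```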

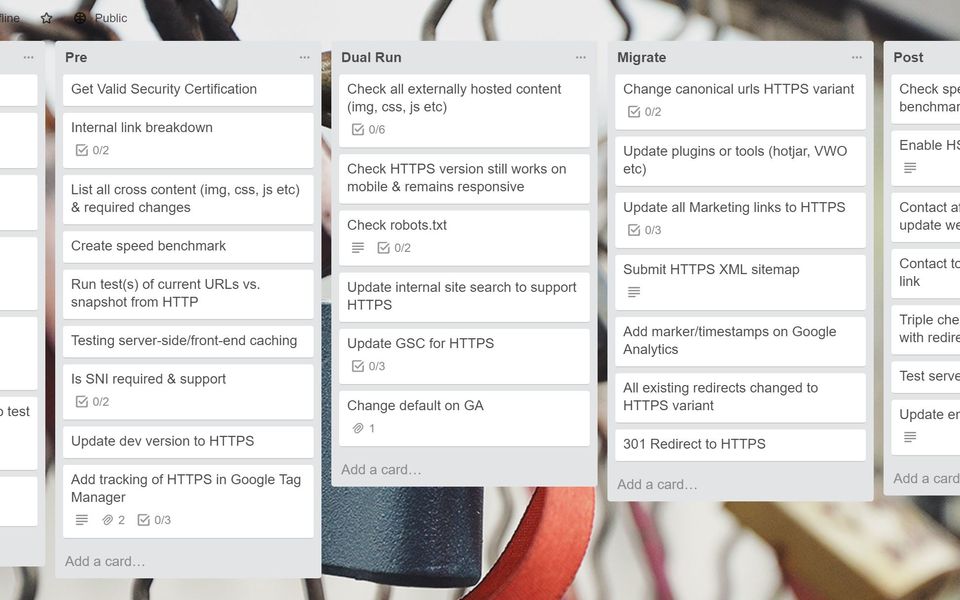

Breaking the Rules During a Migration: A Strategic Opportunity

If you’re managing a migration, this is one instance where it might make sense to break the usual rules. In an ideal world, you have the chance to create dynamic sitemaps tailored to your migration needs. With a combination of Google Search Console data and a dynamic sitemap, you can track which URLs Google has yet to crawl from the old domain, which ones need to be recrawled to consolidate authority on the new domain, and whether any pages with valuable backlinks need to be preserved.

For key URLs—especially those with priority terms or existing backlinks—you might want to temporarily direct Google to non-canonical pages or 301 redirects. This is a short-term solution designed to ensure that Google doesn’t overlook important content during the transition. Once you’re confident that most of the traffic has shifted to the new domain (around 99%), it’s time to remove the XML sitemaps from the old domain and complete the migration cleanup.
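Here's a minimal sketch of how the "still to be crawled" list for a temporary migration sitemap might be derived. It assumes you have a redirect map from old to new URLs and a set of old URLs Google has already re-crawled since the switch (for example from server logs or Search Console exports); all names are hypothetical.

```python
def migration_sitemap_urls(redirect_map, recrawled_old_urls, priority_old_urls=frozenset()):
    """Old URLs to list temporarily in a "dirty" migration sitemap.

    redirect_map: {old_url: new_url}
    recrawled_old_urls: old URLs seen re-crawled since the switch (hypothetical export)
    priority_old_urls: old URLs with priority terms or backlinks
    """
    pending = [old for old in redirect_map if old not in recrawled_old_urls]
    # Priority URLs first (False sorts before True), then alphabetically.
    return sorted(pending, key=lambda old: (old not in priority_old_urls, old))
```

Once this list is close to empty and traffic has moved across, the old-domain sitemap has done its job and can be removed.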

Auditing XML sitemaps

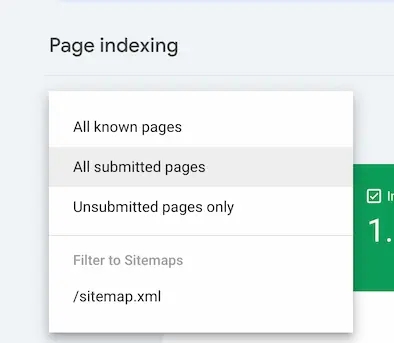

Start by checking your XML sitemaps in Google Search Console and Bing Webmaster Tools. If they have already been submitted, the coverage reports will give you a lot of insight.

Check your XML Sitemaps in Google Search Console

- Click Sitemaps in the menu, then sort by status to check that every sitemap is readable and available. Fix any that aren’t.

- Next, open the ‘Pages’ report under Indexing and filter it to All submitted pages. This shows how many submitted URLs Google can’t index, and why.

- Switch the filter to Unsubmitted pages only to see whether a large volume of pages that should be in your XML sitemaps are missing from them.
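Search Console's view only covers URLs Google already knows about, so it can help to run the same comparison against your own crawl. A minimal sketch, assuming you have the sitemap XML and a list of indexable URLs from a crawler export (both inputs hypothetical):

```python
import xml.etree.ElementTree as ET

SM_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_locs(xml_text):
    """All <loc> values in a sitemap (image:loc lives in another namespace and is skipped)."""
    return {el.text.strip() for el in ET.fromstring(xml_text).iter(f"{SM_NS}loc") if el.text}


def coverage_gaps(sitemap_xml_texts, crawled_indexable_urls):
    in_sitemaps = set()
    for text in sitemap_xml_texts:
        in_sitemaps |= sitemap_locs(text)
    crawled = set(crawled_indexable_urls)
    return {
        # Indexable pages the sitemaps forgot.
        "missing_from_sitemaps": sorted(crawled - in_sitemaps),
        # Listed pages the crawl never reached: possible orphans or dead URLs.
        "in_sitemaps_not_found_in_crawl": sorted(in_sitemaps - crawled),
    }
```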

Validate your XML sitemap using an XML validator

Using an XML sitemap validator can help identify syntax errors, malformed URLs, and other issues that could prevent search engines from properly processing the sitemap.

Use specialist SEO tools

You should also run your XML sitemaps through Screaming Frog or Sitebulb to get a deeper understanding of how these URLs perform.
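For a quick first pass before reaching for a dedicated validator, a script can catch malformed XML, an unexpected root element, relative or malformed `<loc>` values, and breaches of the size limits. This is a sketch using Python's standard library; it does not validate against the official XSD schema.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

SM_URI = "http://www.sitemaps.org/schemas/sitemap/0.9"


def validate_sitemap(data: bytes, max_urls=50_000, max_bytes=50 * 1024 * 1024):
    """Return a list of problems found in a sitemap or sitemap index (empty list = none found)."""
    errors = []
    if len(data) > max_bytes:
        errors.append("file exceeds 50MB uncompressed")
    try:
        root = ET.fromstring(data)
    except ET.ParseError as exc:
        return errors + [f"not well-formed XML: {exc}"]
    if root.tag not in (f"{{{SM_URI}}}urlset", f"{{{SM_URI}}}sitemapindex"):
        errors.append(f"unexpected root element {root.tag}")
    locs = [el.text or "" for el in root.iter(f"{{{SM_URI}}}loc")]
    if len(locs) > max_urls:
        errors.append(f"{len(locs)} URLs exceeds the {max_urls} limit")
    for loc in locs:
        parts = urlsplit(loc.strip())
        # Sitemap URLs must be absolute, including protocol and host.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            errors.append(f"malformed or relative URL: {loc!r}")
    return errors
```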

You can certainly do more with XML sitemaps, but mastering these fundamentals is crucial for any website looking to improve its search engine visibility. Getting these basics right will set a solid foundation for your broader SEO strategy.

Other SEO Experts’ Opinions on XML Sitemaps

"A common issue I’ve seen with XML sitemaps is when they are not updated regularly to add new pages or if significant changes (like a load of redirects) are added to the site. Having a dynamic sitemap over a static XML sitemap can help with this but it’s important to spot check this also as sometimes pages that are not meant to be in there can creep in. The key is regular sitemap basis if you are making changes to the site on a very regular basis and rectifying any errors that occur as swiftly as possible. An example that I’ve seen is parameter URLs being included in the XML sitemap on a Shopify site, despite these pages being canonicalized to the parent category AND noindexed. This is just sending a bunch of confusing signals to Google. Clean up your XML sitemaps and keep them clean!"

Sophie Brannon - SEO Consultant and Director of SEO at Rush Order Tees

"Although tools such as Yoast are great for keeping on top of sitemap management, I believe it's still important (especially with larger sites) to have a technical SEO expert review this periodically to ensure that your sitemap is optimised the best it can be for search engines."

Charlie Clark - Minty Digital

Gerry’s final thoughts

Remember, the world of SEO is constantly evolving, and staying on top of best practices can be challenging. If you find yourself needing expert guidance or assistance in optimizing your XML sitemaps—or any other aspect of your SEO strategy—don’t hesitate to reach out to an experienced SEO consultant. As an SEO professional with years of experience in this field, I’m always here to help. Feel free to contact me, Gerry White, for personalized advice tailored to your website’s unique needs.

By focusing on these XML sitemap essentials and seeking expert help when needed, you’ll be well on your way to improving your website’s visibility and performance in search engine results.